Objectives #

Inertial measurement unit (IMU) sensors are non-intrusive and pervasively available. Over the past decades, researchers from diverse backgrounds have explored wearable IMUs in health-related applications. Meanwhile, the population is aging: the global population aged 60 years or over has doubled since the 1980s, and Canada is among the nations facing a growing senior population. Over the next 20 years, Canada's senior population is projected to grow by 68%, which brings new challenges to public health services. Older adults tend to be more frail and less mobile than young adults, which makes it important to monitor and assess their mobility status. Wearable IMU sensors offer a promising solution to this emerging challenge.

The objective of our project is to apply deep learning to data collected from wearable IMU sensors and to give primary care providers of older adults an accurate assessment based on that analysis. We are also exploring data simulation techniques that generate IMU data from video or optical motion capture (MoCap) systems, in order to provide more training data for the machine learning models.

Participants #

- Yujiao Hao (PhD student)

- Loyung Liao (PhD student)

- Andrew Mitchell (PhD student)

- Yunkai Yu (PhD student)

- Xijian Lou (MSc student)

- Gaole Dai (undergraduate student)

Research Thrusts #


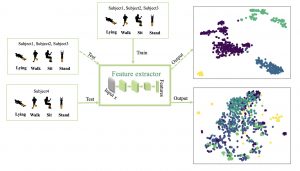

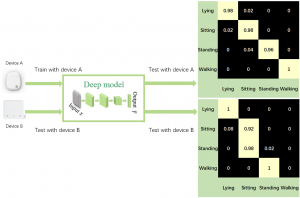

Invariant Feature Learning for Wearable Sensor-based HAR #

Wearable sensor-based human activity recognition (HAR) has been a research focus in ubiquitous and mobile computing for years, and many deep models have recently been applied to HAR problems. However, deep learning methods typically require a large amount of data to generalize well. Significant variation across participants and sensor devices limits the direct application of a pre-trained model to a subject or device that has not been seen before. To address these problems, we proposed an invariant feature learning framework (IFLF) that extracts common information shared across subjects and devices. IFLF incorporates two learning paradigms: 1) meta-learning, to capture robust features across seen domains and adapt to an unseen one with similarity-based data selection; and 2) multi-task learning, to deal with data shortage and enhance overall performance via knowledge sharing among different subjects. Experiments demonstrated that IFLF is effective in handling both subject and device diversity across popular open datasets and an in-house dataset. It outperforms a baseline model by up to 40% in test accuracy.
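The idea of similarity-based data selection can be illustrated with a small sketch. This is not the IFLF implementation: the text above does not state which similarity measure is used, so the cosine similarity between mean feature vectors, the function name `select_similar_domains`, and the parameter `k` are all assumptions made for illustration.

```python
import numpy as np

def select_similar_domains(source_features, target_features, k=2):
    """Rank source domains (e.g. subjects or devices) by similarity to a target.

    source_features: dict mapping a domain name to an (N_i, D) feature array.
    target_features: (M, D) array of features from the unseen domain.
    Returns the k most similar domain names and the score of every domain.

    Assumption: similarity is the cosine between mean feature vectors.
    """
    target_mean = np.asarray(target_features, dtype=float).mean(axis=0)
    scores = {}
    for name, feats in source_features.items():
        source_mean = np.asarray(feats, dtype=float).mean(axis=0)
        denom = np.linalg.norm(source_mean) * np.linalg.norm(target_mean)
        # Guard against all-zero feature means.
        scores[name] = float(source_mean @ target_mean / denom) if denom > 0 else 0.0
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores
```

Data from the selected domains could then be used to adapt a model to the unseen subject or device; how that adaptation is carried out is outside the scope of this sketch.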


Multi-modality IMU Data Simulator #

Collecting and labeling IMU data for human pose estimation or activity recognition is a labor-intensive and time-consuming task. Existing public IMU datasets are either small in scale or limited in subject diversity. In contrast, MoCap and monocular video data are far more abundant. Therefore, we propose to simulate IMU data at arbitrary on-body locations from either MoCap or RGB video data. The simulated data can then be used for downstream tasks such as HAR and human pose estimation (HPE).
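As a rough illustration of how an accelerometer signal can be derived from MoCap data, the sketch below differentiates a position trajectory twice and expresses the result in the sensor frame. It is a minimal sketch under stated assumptions, not the project's simulator: it assumes a world frame with the z-axis pointing up, known sensor-to-world rotation matrices, and noise-free input. The function name `simulate_accelerometer` is hypothetical.

```python
import numpy as np

# Assumption: world frame has z pointing up, so gravity points along -z.
GRAVITY = np.array([0.0, 0.0, -9.81])  # m/s^2

def simulate_accelerometer(positions, rotations, fs):
    """Simulate ideal accelerometer readings from a MoCap trajectory.

    positions: (T, 3) sensor positions in the world frame, in metres.
    rotations: (T, 3, 3) sensor-to-world rotation matrices.
    fs: sampling rate in Hz.
    Returns a (T, 3) array of specific force in the sensor frame (m/s^2).
    """
    dt = 1.0 / fs
    # Numerical differentiation: position -> velocity -> acceleration.
    velocity = np.gradient(positions, dt, axis=0)
    accel_world = np.gradient(velocity, dt, axis=0)
    # An accelerometer measures specific force: acceleration minus gravity.
    specific_force_world = accel_world - GRAVITY
    # Rotate world-frame vectors into the sensor frame (R^T @ f per sample).
    return np.einsum("tji,tj->ti", rotations, specific_force_world)
```

For a stationary, axis-aligned sensor this returns approximately `[0, 0, 9.81]` at every sample, which matches what a real accelerometer reports at rest. Real MoCap data is noisy, so smoothing before differentiation would likely be needed in practice.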


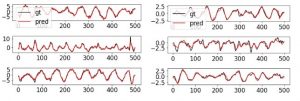

IMU-based 3D Knee Joint Angle Estimation #

Inertial measurement unit (IMU)-based three-dimensional (3D) knee joint kinematic estimation with deep learning is a multidisciplinary research project that integrates expertise from kinesiology and deep learning. The project examines the following research questions: i) can a data-driven deep learning model predict the 3D knee joint angles of healthy adults during walking with high accuracy from IMU data, ii) can additional IMUs on the thigh and shank improve prediction accuracy, and iii) what is the optimal combination of IMU locations? In answering these questions, the project will lay a foundation for a comprehensive, flexible, and low-cost biomechanical data estimation framework for assessing gait in healthy adults and people with movement disorders. With accurate deep learning-based estimation of 3D knee joint gait kinematics from IMUs and an optimal sensor placement, we can also remove the need for the expensive and time-consuming data collection and calibration required by traditional kinematic estimation procedures. This work has the potential to help healthy adults, older adults, and clinical patients accurately monitor their motion outside the laboratory in a simple, low-cost, and comfortable way. Such detailed and accurate kinematic information would allow patients and doctors to monitor movement, promote health, and ultimately lighten the burden of musculoskeletal disorders on the national healthcare system.
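One common way to quantify the accuracy of predicted joint angles is the root-mean-square error (RMSE) against reference angles, computed separately for each anatomical axis. The text above does not state which metric the project uses, so the sketch below is only an assumption for illustration; the function name `per_axis_rmse` and the three-column layout are hypothetical.

```python
import numpy as np

def per_axis_rmse(predicted, reference):
    """Root-mean-square error per angle axis.

    predicted, reference: (T, 3) arrays of knee joint angles in degrees,
    one column per axis (assumed layout), one row per time sample.
    Returns a length-3 array with the RMSE of each axis in degrees.
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if predicted.shape != reference.shape:
        raise ValueError("predicted and reference must have the same shape")
    # Average the squared error over time, separately for each column.
    return np.sqrt(np.mean((predicted - reference) ** 2, axis=0))
```

Comparing this metric across models trained with different IMU subsets is one way the second and third research questions could be evaluated.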

Publications #

- Hao Y, Zheng R, Wang B. Invariant Feature Learning for Sensor-based Human Activity Recognition[J]. IEEE Transactions on Mobile Computing, 2021.

Downloads #

Acknowledgement #

We gratefully acknowledge the support of the NSERC Discovery and CREATE programs in funding this research, and extend our thanks to MIRA for the opportunity for interdisciplinary collaboration.